For my current customer I'm in the process of migrating their application from Google App Engine Flexible (formerly Managed VMs) to Kubernetes running on GKE. During extensive testing I concluded that although pod readinessProbes are optional, they should in fact be mandatory whenever a Service is connected to your pods.

What is a readinessProbe anyway?

Because your application is wrapped in a Docker container, running inside a Kubernetes pod that is scheduled onto a node, there needs to be a mechanism to interact with it during its lifetime. Kubernetes currently provides two types of health checks to verify whether your application is working as intended: livenessProbes and readinessProbes. Both kinds of probes are executed by the kubelet agent on the node.

- LivenessProbes are used to determine that a container in the pod needs to be restarted because its behavior is incorrect.

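- ReadinessProbes are used to signal to the load balancer (the Service) that a pod is not ready to accept work at the moment. The container is not restarted; it simply stops receiving traffic until the probe succeeds again.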
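To illustrate the difference, here is a minimal sketch of a single container that declares both kinds of probes. The container name, image and port mirror the example further down in this post; using a `tcpSocket` check for liveness, `/index.html` for readiness, and the specific delay values are assumptions chosen for illustration, not a recommendation for every application.

```yaml
containers:
- name: my-wordpress-application
  image: my-wordpress-application:0.0.1
  ports:
  - containerPort: 80
  # Liveness (illustrative check): if port 80 stops accepting TCP
  # connections, the kubelet restarts the container
  livenessProbe:
    tcpSocket:
      port: 80
    initialDelaySeconds: 15   # illustrative value
    periodSeconds: 20         # illustrative value
  # Readiness: while this check fails, the pod is taken out of the
  # Service's endpoints, but the container is NOT restarted
  readinessProbe:
    httpGet:
      path: /index.html
      port: 80
    initialDelaySeconds: 5
    periodSeconds: 10
```

A liveness probe that fires too early can push a slow-starting container into a restart loop, which is why its initial delay in this sketch is longer than the readiness delay.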

A day in the life of your pod

Take a simple sample application (an HTTP web server) running as a pod in your Kubernetes cluster and receiving traffic through a Service. You have more than enough machines running, with room for pods to scale if need be. And today is the big day: you get slashdotted.

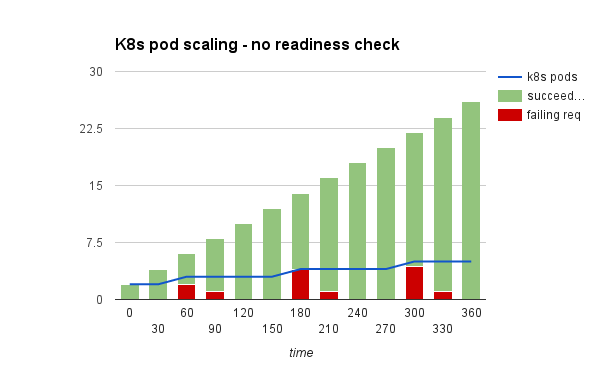

Without a readinessprobe defined, this might be how your traffic will look like:

This happens because your pod starts receiving load as soon as it is in the Running phase and is considered Ready to process work.

From the documentation:

> The default state of Readiness before the initial delay is Failure. The state of Readiness for a container when no probe is provided is assumed to be Success.

Combine this with the pod phase:

> Running: The pod has been bound to a node, and all of the containers have been created. At least one container is still running, or is in the process of starting or restarting.

Nothing in this verifies that your application is done with its initial setup and ready to start processing.
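To see where readiness comes into play, consider the Service in front of the pods. The following is a minimal sketch; the Service name `my-wordpress-service` and the label `app: my-wordpress` are hypothetical and assume your pods carry that label. A Service forwards traffic only to the pods listed as ready in its endpoints, and without a readinessProbe a pod is listed there as soon as its containers are running.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-wordpress-service   # hypothetical name
spec:
  # Every pod with this (hypothetical) label is a candidate backend,
  # but only pods whose Ready condition is true receive traffic
  selector:
    app: my-wordpress
  ports:
  - port: 80         # port exposed by the Service
    targetPort: 80   # containerPort of the pods
```

You can check which pods currently receive traffic with `kubectl get endpoints my-wordpress-service`; a pod whose readiness check is still failing is not listed as a ready address.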

Let's fix it with readiness checks

Append a readinessProbe to the configuration of your pods:

    containers:
    - name: my-wordpress-application
      image: my-wordpress-application:0.0.1
      ports:
      - containerPort: 80
      readinessProbe:
        httpGet:
          # Path to probe; should be cheap, but representative of typical behavior
          path: /index.html
          port: 80
        initialDelaySeconds: 5
        timeoutSeconds: 1

This probe waits 5 seconds before verifying that the application running in your container is ready to serve, and each check must answer within 1 second. If the check fails, it is retried 10 seconds later. Only when your application responds to the HTTP GET request with a status code between 200 and 399 is the pod added to the load balancer.
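The 10-second retry interval is not set in the example above; it comes from Kubernetes' default `periodSeconds`. Writing out the relevant defaults explicitly (as documented for the Probe type) makes the timing easier to reason about. This is a sketch of the same probe with those defaults filled in, not a change in behavior:

```yaml
readinessProbe:
  httpGet:
    path: /index.html
    port: 80
  initialDelaySeconds: 5   # wait 5s after the container starts before the first check
  timeoutSeconds: 1        # a check that takes longer than 1s counts as a failure
  periodSeconds: 10        # default: run the check every 10s
  successThreshold: 1      # default: one success marks the pod Ready
  failureThreshold: 3      # default: three consecutive failures mark it not Ready
```

With these values the pod can become Ready at the earliest about 5 seconds after the container starts; if the application is still booting at that point, the next attempt happens roughly 10 seconds later. Lowering `periodSeconds` shortens that gap at the cost of more frequent requests to the probed path.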

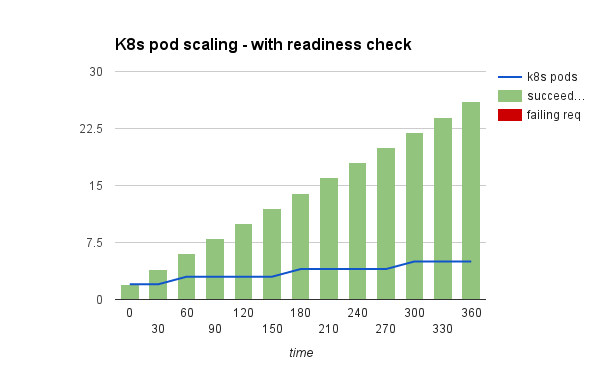

And lo and behold, your scaling behaviour will now look as follows:

This leads me to conclude that whenever your pod receives input of some sort, you should always add a readinessProbe to prevent errors during the initial startup of your application.
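Not every pod behind a Service speaks HTTP. For such workloads a `tcpSocket` readiness check, which succeeds once the port accepts a connection, is one option; an `exec` probe, which succeeds when its command exits with status 0, is another. A minimal sketch of the `tcpSocket` variant, where the container name, image and port 9000 are hypothetical:

```yaml
containers:
- name: my-tcp-server           # hypothetical non-HTTP server
  image: my-tcp-server:0.0.1    # hypothetical image
  ports:
  - containerPort: 9000         # hypothetical port
  readinessProbe:
    # Ready once port 9000 accepts TCP connections
    tcpSocket:
      port: 9000
    initialDelaySeconds: 5
    periodSeconds: 10
```

Note that a successful TCP connect only proves the port is open, not that the application has finished its setup, so an `httpGet` or `exec` check that exercises real functionality is preferable when one is available.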